One of the fundamental functions of a product or service is solving user pain points and addressing their needs. Theoretically, we all get that, right? We all understand that a product needs to provide its users with value while also being intuitive and easy to use.

But how does one know that their product satisfies the criteria above? The answer is usability testing, a practice that a wide array of companies need to start investing their time in.

To many, this is still an untrodden path. This is why we’ve decided to put together a comprehensive usability testing guide. We’ll discuss the entire process, how to ask better questions, user recruitment, the tools you need for usability testing, and much, much more.

Let’s get right into it!

What is usability testing?

Before we start exploring the subtleties of the practice, let’s look into its fundamental functions first.

What does this type of testing aim to achieve in the first place? Essentially, its goal is to assess how usable a particular piece of design is.

Generally speaking, usability testing comes in two types: moderated and unmoderated. Moderated sessions are guided by a researcher or a designer, while the unmoderated ones rely on users’ own unassisted efforts.

Moderated tests are an excellent choice if you want to observe users interact with prototypes in real time. This approach is more goal-oriented: it lets you confirm or refute existing hypotheses with more confidence.

On the other hand, unmoderated usability tests are convenient when working with a substantial pool of subjects. A large number of participants allows you to identify a broader spectrum of issues and points of view.

However, it’s important to underline that testing isn’t that black and white. It’s best to look at this practice as a spectrum between moderated and unmoderated testing.

Sometimes, during unmoderated sessions, we like to nudge our subjects in the right direction through mild moderation when necessary.

Why do you need usability testing?

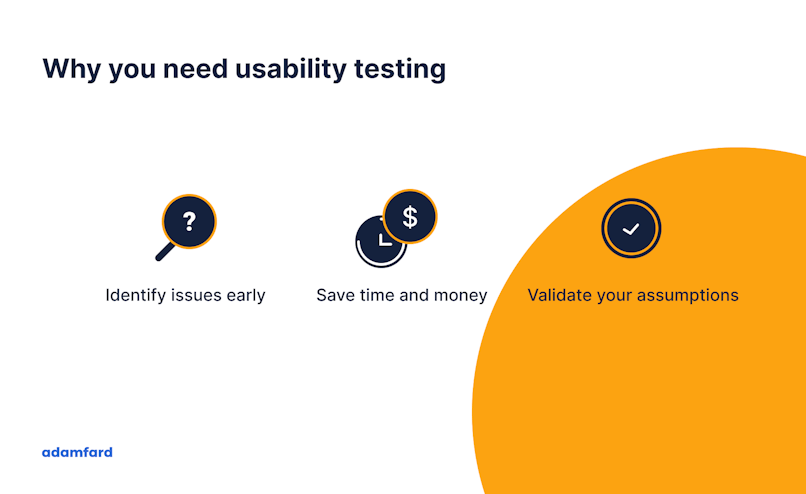

Testing our prototypes can provide us with a wide array of insights. Fundamentally, it helps us spot flaws in our designs and identify potential solutions to the issues we’ve uncovered.

We learn about the parts of our product that confuse or frustrate our users. By disregarding this step, we open ourselves up to the possibility of releasing a product that causes too much friction, which increases the chances of an unsuccessful release.

We can boil down the main benefits of usability testing to the following:

It allows us to identify usability issues early in the design process. As a result, we can address them promptly. Fixing usability problems in a live product is considerably more expensive and time-consuming. It puts your product at risk and your company through unnecessary financial stress;

It validates the product and solution concepts. Plus, it helps us answer some essential questions about the product: “Is it something users need?” and “Is the current design solution the optimal way for users to go about solving this problem?”;

Not only does testing help us spot problems, but it also allows us to find better solutions quicker. The insight we extract from these tests will enable us to quickly brainstorm, prototype, and validate concepts at the very early stages;

It’s important to also mention the things that this type of testing is not suitable for:

Identifying the emotions and associations that arise from your prototypes;

Validating desirability;

Identifying market demand;

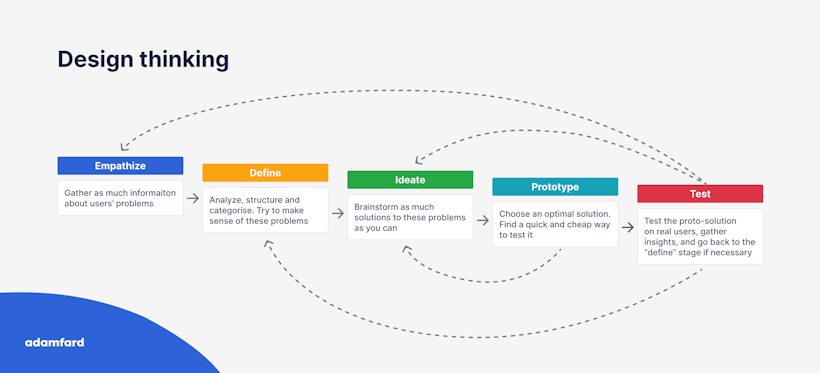

The grand scheme of things

Let’s take a bird’s eye view of the Design Thinking model to better understand the value usability testing brings to the table. Below, you’ll find an illustration of how agile ideas should be executed.

Let’s do a quick recap of the process:

We start by gaining an empathetic understanding of the user’s problem we’re trying to solve via design;

Once we’ve identified the problem, it’s essential to define it as best as we can;

We brainstorm a broad spectrum of solutions to that problem;

We then shortlist the most feasible and practical solutions and use them to create rough prototypes;

In turn, these prototypes are then tested on actual users to validate our solutions. This brings us to usability testing. This is one of the ways you validate your design;

When should it be conducted?

Usability testing is useful in multiple phases of the design process and in many contexts. What’s certain is that it should be conducted early on and at least a few times, so that our decisions are grounded in empirical evidence.

The way we see it, it’s essential to distance testing from simply fishing for bugs later in the design process. That’s QA, not UX.

The most valuable part of it isn’t just identifying problems. Instead, we’re looking to learn from our mistakes and use this information to create superior iterations of our designs.

Here are a few common scenarios when testing usability is essential:

Any time we develop a prototype for a solution;

At the beginning of a project that has a legacy design. This allows us to get an idea of the initial benchmarks;

Validating our assumptions about metrics. When your tools report unsatisfactory metrics and there could be multiple (often conflicting) reasons for them, usability testing sessions help us identify the root cause of the problem;

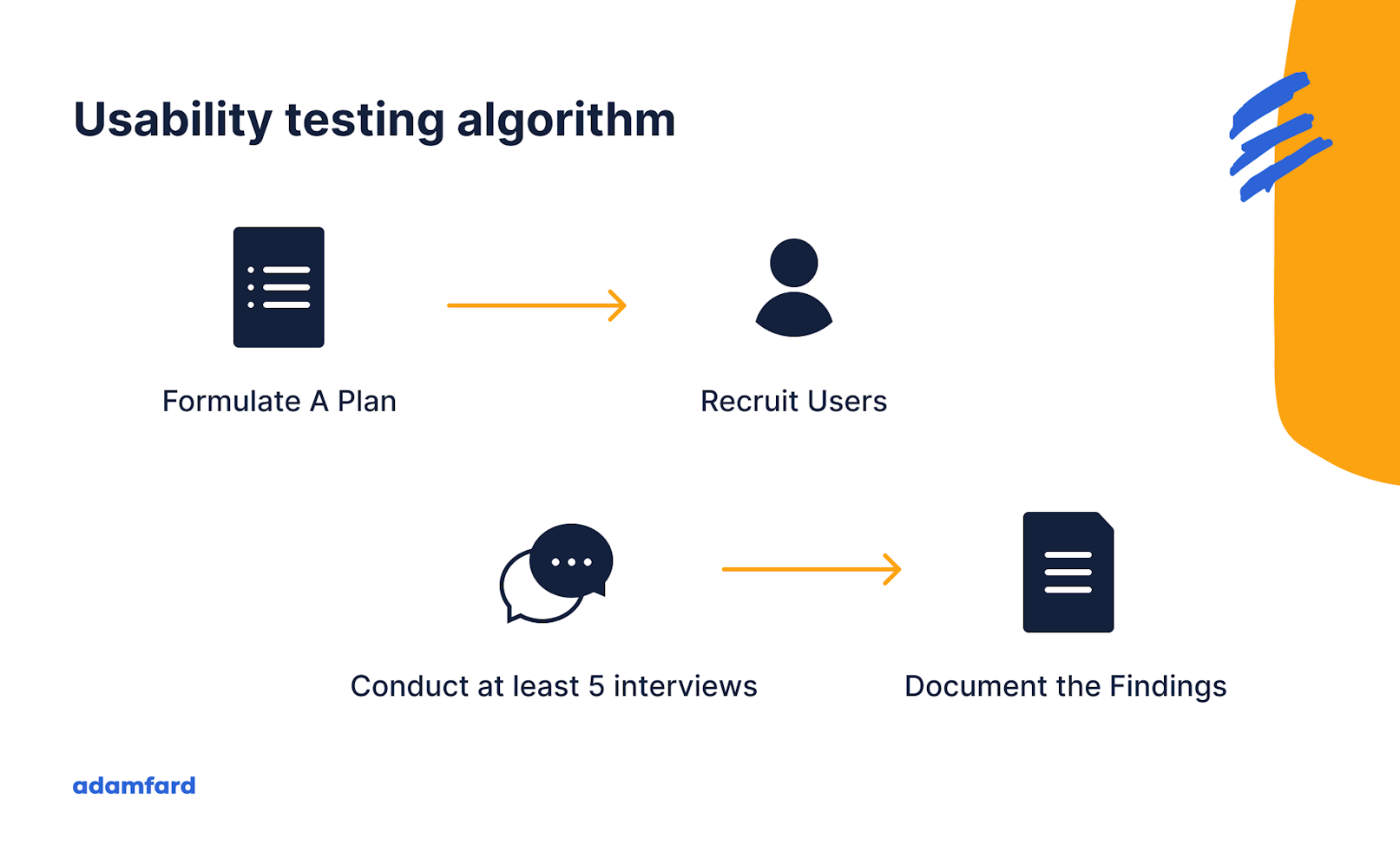

Usability Testing Algorithm

One of the most critical components of a successful usability assessment is a detailed plan. This document will allow you to prescribe the goals, number of participants, scenarios, and metrics for your usability testing sessions.

Traditionally, usability experts collect this information from the product owner and/or other stakeholders. They then draft a preliminary version of the usability testing plan and submit it for feedback. As is customary in UX circles, the process is iterative.

Let’s take a more detailed look at the process of drafting a test plan.

1. Formulate a plan

While running usability tests without a clear scope can provide us with some insight, it can also end up being a burden.

Start with your goals

Tests that don’t seek to validate or invalidate specific hypotheses can often produce lots of chaotic data. As a result, we fail to connect the dots due to the incoherent nature of the information we’ve collected.

Start by establishing clear goals and identify the hypotheses you’re looking to test.

Flesh out your hypotheses

It can be argued that the quality of our usability assessment partly depends on the quality of the hypotheses we’ve developed. Here are a few necessary steps we recommend going through when drafting them:

Bear in mind that a hypothesis is an assumption that can be tested;

Document your assumptions about the product’s usability. Think about the outcome of your test;

Formulate hypotheses based on your assumptions and their potential outcomes;

Establish the outcomes and metrics that will validate your hypotheses;

Test your hypotheses during usability sessions;
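The steps above can be sketched in code as a hypothesis paired with the metric and threshold that would validate it. This is only an illustration: the `Hypothesis` class, its field names, the 0.8 target, and the checkout example are all assumptions made up for this sketch, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """A testable assumption paired with the metric that would validate it."""
    assumption: str  # what we believe about the product's usability
    metric: str      # what we will measure during the sessions
    target: float    # minimum observed value that validates the hypothesis

    def is_validated(self, observed: float) -> bool:
        # The hypothesis holds when the observed metric meets the target.
        return observed >= self.target


# Hypothetical example: 4 of 5 participants completed checkout unassisted.
checkout = Hypothesis(
    assumption="First-time users can complete checkout without help",
    metric="unassisted task completion rate",
    target=0.8,
)
print(checkout.is_validated(observed=4 / 5))
```

Writing the target down before the sessions start makes it harder to reinterpret the results after the fact.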

Create scripts with goals and hypotheses in mind

Once you’ve developed your hypotheses, use them to formulate your research questions. Try to break them down into logical divisions and write your questions around them. Here are a few essential things to keep in mind:

Make sure that your questions are specific and answerable. It’s imperative to eliminate any possible confusion and ambiguity in the subjects’ answers;

Your questions need to be rooted in your hypotheses and must address them directly. Asking questions that are remotely connected to them could make your analysis more complicated and less precise;

Ask as many questions related to a hypothesis as necessary. Clarity and precision are key;

Once formulated, make sure that the answers to all of your questions provide you with “the big picture”;

Make sure that your questions leave little wiggle room. Qualitative research is often subject to bias. Provide subjects with the necessary guidance to make them comfortable with answering your questions truthfully;

Protip: It’s essential not to overwhelm users with too many questions. This is often the case when we commit to too many goals. By broadening the scope of your usability testing, you’re risking diminishing the quality of your data and insight.

2. Recruit your users

An important part of usability studies is working with users who are representative of your user personas. Focus on recruiting people who have a significant overlap with your potential user demographics. Look for similar goals, aspirations, attitudes, and so forth.

Failing to do so might result in misleading data, which renders your usability test pointless. Of course, this depends on the nature of your product as well. Specialized products demand a specialized audience and vice versa.

Where do I look for users?

There are multiple sources of subjects for your usability tests, depending on the type of product you’re looking to test. Below, you’ll find the list of platforms we use to find suitable users for our tests:

Ideally, the product team should be able to help you find users. In turn, you could also ask whether the interviewed person has any acquaintances that fit your user profile. If that doesn’t work for you, consider the resources below;

Facebook and LinkedIn groups — works for both B2B and B2C;

Upwork — works better for B2C than B2B since business owners and stakeholders rarely look for odd jobs;

respondent.io — This tool is generally best-suited for finding busy users that are hard to come by;

userinterview.com — This tool boasts a large pool of potential respondents, making it easy to find just the ones you need. It should work for both B2B and B2C.

Do I need incentives?

Incentivizing your users is a common practice in usability testing.

Typically, the more demanding your requirements and the harder the participants are to come by (CEOs, executive board members, senior software engineers, etc.), the higher the incentive you’ll need to make it worth their while.

Some platforms (such as Upwork or respondent.io) allow you to pay users directly, but you can also consider gift cards. Another common practice is providing your respondents with a premium version of your product if it’s something they might find useful.

The economics of test incentives

According to a study published in 2003 by the Nielsen Norman Group, the average per-user cost was about $171. The economics of test incentives haven’t changed dramatically since then.

Sometimes companies don’t offer monetary incentives to participants. Instead, participants can be remunerated with gift cards, coupons, and so forth.

The study above mentions that the average compensation for external test subjects was about $65 per hour. The United States West Coast averages a little over $80 per hour.

The discrepancy between “general population” and highly qualified users is worth noting as well. The latter are typically paid around $120 per hour, while the former are rewarded with approximately $30 per hour.

The advent of user testing platforms has made it significantly easier for companies to reach users. As a result, businesses have been able to somewhat decrease what they pay for users’ time.
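To get a feel for what these hourly rates mean for a single round of testing, here is a rough back-of-the-envelope sketch. The hourly rates are the ones quoted above; the 5 participants, the 40-minute session length, and the `incentive_budget` helper are assumptions chosen purely for illustration, and the estimate ignores recruiting fees and staff time.

```python
def incentive_budget(participants: int, hourly_rate: float, session_minutes: int) -> float:
    """Total incentive cost for one round of sessions, in the rate's currency."""
    return participants * hourly_rate * session_minutes / 60


# Hourly rates quoted in the text; participant count and session length are assumed.
rates = [
    ("general population", 30),
    ("average external subject", 65),
    ("highly qualified", 120),
]
for label, rate in rates:
    print(f"{label}: ${incentive_budget(5, rate, 40):.2f}")
```

Under these assumptions, a round of tests costs somewhere between $100 and $400 in incentives, depending on how specialized the audience is.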

3. Run usability interviews

Before you run the actual tests, there’s plenty of prep work that needs to be done. In this section, we’ll take a closer look at the optimal number of tests that need to be conducted, tools, questions, and so forth.

How many tests? How many people?

Back in 2000, the Nielsen Norman Group posted yet another essential article on strategizing usability testing. The piece reports on their findings on the optimal number of users necessary to test a product's usability.

“Elaborate usability tests are a waste of resources. The best results come from testing no more than 5 users and running as many small tests as you can afford.”

— Jakob Nielsen

A study conducted by Jakob Nielsen and Tom Landauer illustrates the number of usability issues you can identify with a varying number of users.

Their report suggests that with 3 users, you’ll be able to uncover over 60% of usability problems. Each additional user reveals fewer new issues than the one before, so your ROI steadily decreases.

By testing 5 people, you’ll be able to uncover 85% of usability issues, which is generally considered to be the sweet spot in terms of price-to-value ratio.

Rather than investing in one or two elaborate tests, approach design iteratively. We recommend running 5 tests for a given set of tasks.

This number provides the most insight with the fewest people. You could run more tests than that, but that could take a toll on your finances while providing you with little value.
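The diminishing returns described above follow from the model in Nielsen and Landauer’s work, where the share of problems found with n users is 1 - (1 - L)^n and L is the chance that a single user exposes a given problem. The sketch below assumes L = 0.31, the average Nielsen reports across the projects they studied; your own product may have a different rate, and the function name is ours.

```python
def share_of_problems_found(users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems uncovered by a number of test users.

    detection_rate is the probability that one user exposes a given problem;
    0.31 is the reported average and an assumption for any specific product.
    """
    return 1 - (1 - detection_rate) ** users


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%}")
```

With this rate, 3 users come in at roughly two thirds of the problems and 5 users at roughly 85%, after which each extra participant adds only a few percentage points.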

What tools should I use?

There is great variety when it comes to tools. The type of products you’ll be using depends on the kind of tests you’re looking to conduct.

You can always opt for platforms like Maze and UserTesting that specialize in testing and research. However, if that’s not essential, Zoom, Skype, and Google Meet are more cost-efficient options.

One vital feature that needs to be taken into account is the recording function. You’ll want to have your sessions recorded and saved to allow you to access them at all times, thus minimizing bias.

More importantly, it will allow you to better empathize with your users in the long run.

4. Remote or in-person interviews?

At the time of publishing this article, we’re still in the midst of the COVID-19 pandemic. So, it’s safe to assume that we won’t and shouldn’t be doing any in-person interviews anytime soon.

Let’s take a second to explore the benefits and drawbacks of remote and face-to-face testing.

In-person interviews

While lab testing typically demands more work, it’s considered to yield a broader range of data. The fact that you’re there with the user ensures that you can closely observe their body language and more subtle reactions. Remote testing has its limitations in this regard.

In turn, this allows us to better understand our users’ behavior and dig for deeper insights. Plus, the fact that we’re in the user’s immediate presence helps us ask the right questions at the right time.

Going remote

Given that we are a globally distributed team at the Adam Fard Studio, we’re all for remote testing. We consider it more advantageous for a variety of reasons.

First off, it’s much easier to recruit users and run tests. Testing platforms provide companies with immense databases of users categorized in a wide array of parameters. Plus, the large size of the user bases allows you to conduct usability tests concurrently and around the clock.

Secondly, we believe that tests have to be conducted in a “natural environment,” that is, on a person’s own device, not in a sterile lab.

Last but not least, it’s a more cost-effective way of conducting tests. There are simply fewer expenses compared to driving a person to an on-site lab. It takes up less of their time, which results in a smaller cost per conducted test.

5. What questions should I ask?

It’s important to underline that usability interviews are more than just the questions you ask during the test. Typically, interviewers need to ensure proper communication before and after the test as well.

Before the interview

“Test” is generally a triggering word. We associate it with having our knowledge or understanding of something assessed. It’s imperative to stress to the participants that this is not the case. Underline the idea that you’re testing designs, not users. Make them feel comfortable by mentioning that there are no wrong answers.

We like to kick things off with some small talk. It allows users to relax and be more comfortable with the entire process.

There are a variety of useful questions that can be asked before the interview. Generally, they can be grouped into two categories: background and demographic questions.

The latter allows us to identify potential usability trends in various demographics, ages, genders, income groups, and so forth. Needless to say, we need to do it in a thoughtful and careful manner.

The former has to do with the users’ interaction with products similar to yours and their preferences regarding them.

During the interview

Given that you’re looking to learn about your users’ experience, there are two central things to keep in mind:

Don’t ask leading questions;

Ask questions that encourage open-ended answers;

Leading questions are extremely harmful to a usability test. It’s safe to say that they can pretty much invalidate your results, rendering the test pointless. To make sure that doesn’t happen, we should always invest enough time and effort to formulate questions that are neutral and open.

Here are a few examples of questions you should avoid:

What makes this experience good?

How intuitive was the interface?

Was the product copy easy to understand?

It’s common knowledge that people want to be polite to other people. Such loaded questions will involuntarily skew the users’ opinions on the experience. As a result, that will stop you from extracting real insight from the test.

Here are a few examples of questions we’d recommend asking your users:

What’s your opinion on the navigation within the product?

How did you feel about using this particular feature?

Would you prefer to perform this action differently?

What would you change in the product’s workflow?

After the interview

Once the test comes to an end, it’s always a good idea to ask some general questions about the person’s experience with the product. Feel free to ask more open-ended questions that will allow you to extract even more insight from the test.

Is there anything we didn’t ask you that you’d like to share your opinion on?

How did you like the experience in general on a scale from 1 to 10?

What are the things you enjoyed most/least about the product?

How long should the sessions last?

Generally, 30-40 minutes per session is a good ballpark. Try not to go beyond one hour. Fatigue can be a factor when it comes to the objectivity of the user’s experience. Furthermore, longer sessions typically result in greater amounts of data, making it more complicated to analyze.

Document your findings

If you’ve conducted a session that was recorded, go through it once again and document the answers, the user’s body language, etc. It’s essential to explore any linguistic and behavioral peculiarity that can bring you closer to more in-depth insight.

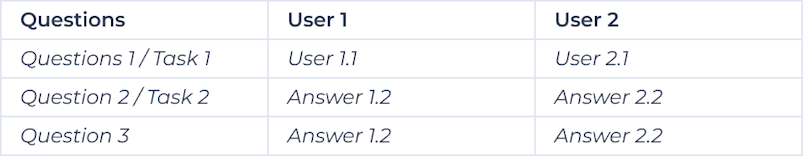

Using spreadsheets or tools like Miro can help you structure your findings in a meaningful manner. Consider structuring your findings as follows:

Plus, you can also extract quantitative data from usability tests by quantifying the frequency of certain events.
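As a minimal sketch of that kind of quantification, the snippet below tallies how many participants ran into each tagged event. The event names and session data are hypothetical placeholders; in practice they would come from your own session notes or recordings.

```python
from collections import Counter

# Hypothetical observations: one list of tagged events per recorded session.
sessions = [
    ["missed_search_icon", "hesitated_at_checkout"],
    ["missed_search_icon"],
    ["misread_pricing_label", "missed_search_icon"],
    ["hesitated_at_checkout"],
    [],
]

# Count each event once per session so the tally reads as "N of 5 users".
affected_users = Counter(event for events in sessions for event in set(events))

for event, count in affected_users.most_common():
    print(f"{event}: {count} of {len(sessions)} users")
```

Counting per user instead of per occurrence keeps one participant who repeats the same mistake several times from inflating the numbers.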

What’s next?

Now that the bulk of the work is done, it’s time to analyze the data you’ve collected and extract insight. At this stage, we typically create usability reports that allow us to communicate our findings and devise a plan of action.

Define the issues

Look into the data you’ve collected and define the most critical issues the users have come across. It’s always a good idea to complement each issue with a clear description, as well as when and where it occurred.

Prioritize the issues

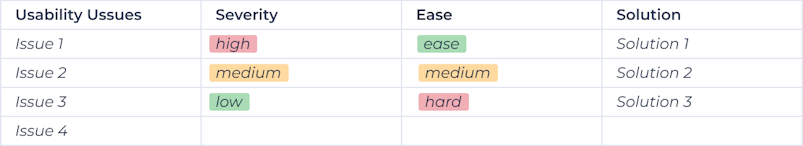

Not all usability problems are equal. This is the phase where you prioritize them based on their criticality. As a result, your team’s time will be invested responsibly by favoring the most pressing issues and pushing less important ones further in the backlog.

Consider prioritizing the issues as follows:
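One possible way to make prioritization concrete, offered as a sketch and not necessarily the scheme shown in the figure above, is to weight each issue’s severity by the share of participants who ran into it. The four-level severity scale, the `Issue` class, and the sample issues are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    description: str
    severity: int        # assumed scale: 1 = cosmetic, 2 = minor, 3 = major, 4 = blocker
    users_affected: int  # how many participants ran into the issue

    def priority(self, total_users: int) -> float:
        # Weight severity by the share of participants who hit the issue.
        return self.severity * self.users_affected / total_users


# Hypothetical findings from a round of 5 sessions.
issues = [
    Issue("Low-contrast footer links", severity=1, users_affected=4),
    Issue("Checkout button hidden below the fold", severity=4, users_affected=3),
    Issue("Ambiguous pricing label", severity=3, users_affected=2),
]

TOTAL_USERS = 5
backlog = sorted(issues, key=lambda issue: issue.priority(TOTAL_USERS), reverse=True)
for issue in backlog:
    print(f"{issue.priority(TOTAL_USERS):.1f}  {issue.description}")
```

A score like this is only a starting point for discussion; a rare blocker can still deserve attention before a widespread cosmetic flaw.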

The bottom line

Usability testing plays a crucial role in creating an excellent user experience. Fortunately, more and more companies recognize its value, and it seems like this practice is gradually becoming the new norm.

However, as we’ve mentioned previously, conducting usability tests just for the sake of conducting them will yield little useful data.

By following the principles above, you’ll be able to get a better understanding of your users, their preferences, and the shortcomings in the current iteration of your design. As a result, you’ll be steadily moving towards creating a useful product that’s also a pleasure to use.

Good luck!
